Every year or so, another so-called revolutionary AI tool is announced that will supposedly completely reinvent the software industry. AI and machine learning are not new concepts, but only recently have developers been able to actually create learning algorithms that take multiple inputs or experiences and use those experiences to modify their output.

The most recent example of this long-time pipe dream is Copilot: a new AI tool from GitHub. Copilot is supposed to cut down on development time and allow developers to create entire chunks of code based only on semantic hints, code examples, and comments from devs themselves.

However, there’s also plenty of evidence to suggest that Copilot could introduce new security risks into organizations that adopt it. Today, let’s explore what Copilot is, what it may mean for the future of the industry, and the security risks you’ll have to keep in mind when considering adopting this tool if it is ever released to the public.

What is Copilot?

Copilot is GitHub’s new machine learning coding tool. It was created through a collaboration between GitHub and OpenAI, and it is built on OpenAI’s Codex: a generative learning system trained on English-language text and on source code taken from public repositories, including those hosted on GitHub.

In simpler terms, Copilot is a machine learning agent that can help developers make blocks of code based on semantic hints and other basic directions. In theory, this will help cut down programming time significantly, which should bode well for novice developers or those who have less experience. Remember, the average developer today has less than five years of total experience under their belt.

So far, Copilot has been trained on billions of lines of code, all from projects within GitHub’s development service. Copilot works by taking hints like source code, comments, and function names, then automatically completing the body of a given function with the most likely result. The process is entirely algorithmic, but it should allow Copilot to create code blocks for standard or rote code needs.
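To make the idea of a “hint” concrete, here is a minimal sketch in Python. The function name, type hints, and docstring stand in for what a developer would type; the body is a hand-written illustration of the kind of completion such a tool might propose. It is not actual Copilot output, and the function `days_between` is a hypothetical example.

```python
from datetime import date


# The signature and docstring below are the "semantic hints" a developer writes.
def days_between(start: str, end: str) -> int:
    """Return the number of days between two ISO dates (YYYY-MM-DD)."""
    # The line below is the sort of body a completion tool might suggest.
    # It is written by hand here for illustration only.
    return (date.fromisoformat(end) - date.fromisoformat(start)).days
```

Even for a completion this small, a human still has to check that the suggestion does what the docstring promises, for example that the sign of the result is what the caller expects when `end` comes before `start`.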

Over time, the hope is that Copilot will become capable of creating larger, more sophisticated code blocks. Once it is fully operational, Copilot may be able to act as the “second half” of a pair programming team: one part human, one part machine.

How Effective is Copilot?

In a technical preview, Copilot was intended to predict the intent of a specific function using documentation strings, function names, comments, code entered by the developer, and other semantic hints. In theory, Copilot would put all of this information together and create code blocks that could be workable when integrated into an existing project’s codebase.

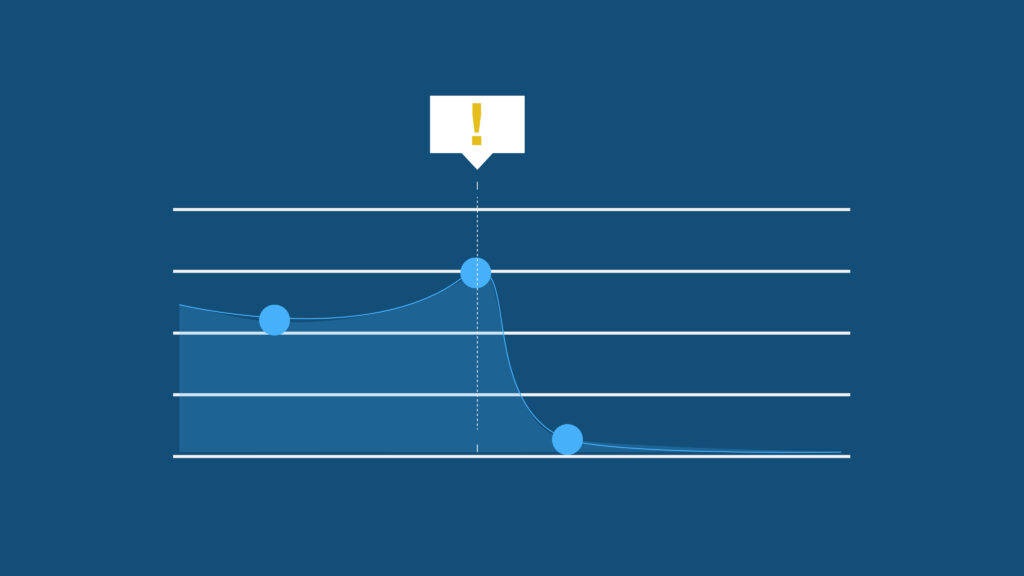

The technical preview tested Copilot on Python functions. In this test, Copilot’s first suggestion was correct in about 43% of the demonstrated cases. Additionally, the correct code block appeared among its 10 most likely results in 57% of all cases.
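The two figures above are a first-suggestion rate and a top-10 rate. As a rough sketch of how such rates could be computed, assume each test case yields a ranked list of pass/fail results, most likely suggestion first. The helper `top_k_accuracy` and the sample data are hypothetical and are not taken from GitHub’s evaluation.

```python
def top_k_accuracy(ranked_results, k):
    """Fraction of cases where any of the first k suggestions is correct.

    ranked_results: one list of booleans per test case, ordered from the
    most likely suggestion to the least likely (True means "correct").
    """
    if not ranked_results:
        raise ValueError("need at least one test case")
    hits = sum(1 for results in ranked_results if any(results[:k]))
    return hits / len(ranked_results)


# Made-up results for four test cases, for illustration only.
cases = [
    [True, False],    # first suggestion correct
    [False, True],    # only the second suggestion correct
    [False, False],   # no suggestion correct
    [True],           # first suggestion correct
]

first_try_rate = top_k_accuracy(cases, 1)   # 2 of 4 cases
top_ten_rate = top_k_accuracy(cases, 10)    # 3 of 4 cases
```

The gap between the two rates matters in practice: a correct answer buried at position seven only helps if the developer reads that far and can tell it apart from the incorrect ones.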

At first glance, that doesn’t seem too inspiring. But given that Copilot is still in the early stages of development, the potential is very easy to see, even for those not familiar with the details of coding and development.

If Copilot were perfect, it might significantly cut down on coding time for major organizations, especially when their software projects have a lot of “standard” code without specific requirements or unique inclusions.

Copilot’s Effects on Security

All of Copilot’s benefits are attractive, to say the least. But they may also be moot if Copilot causes an issue with data protection and organizational security…and it very well might. As any modern development firm leader knows, cybersecurity is of paramount importance. Not only do you need excellent cybersecurity throughout your development pipeline and for your organization itself, but you also can’t adopt new tools that might break your carefully constructed safety net.

It’s very important to have at least one quality dev on your team who understands security. This developer could be hired from a competing firm or a freelancer, which may even be cheaper. You can expect to pay around $60 to $80 an hour for an experienced freelancer who knows what they’re doing.

But regardless of who you hire or what you pay, odds are your security staff aren’t equipped to handle the introduction of a new, potentially volatile piece of software that might do more harm than good. This goes double when you consider how introducing new, potentially security-compromising tools could affect your IT security team or labor force.

This bodes poorly for tools like Copilot, which may unintentionally introduce security flaws that your security team lacks the experience to anticipate or correct.

Are the Benefits Worth the Risk?

Is it really a big deal if Copilot offers more value or a faster turnaround time for your coding projects?

Yes. The best software in the world is worthless if it has a lot of security flaws. Security is paramount in this day and age. Antivirus has to be airtight. You need a backup service for your crucial data. Your code can’t be vulnerable to network attacks.

Bottom line: Copilot might be an interesting tool, but its potential security flaws more than offset that value and should make you think twice before adopting it in your organization.

What Security Risks Can Copilot Cause?

The core security issue with Copilot is this:

- Copilot takes its so-called semantic hints from a variety of sources, including source code

- Lots of source code is public

- Public source code is notorious for bugs, references to outdated APIs, and coding patterns that are, to say the least, insecure

- If Copilot synthesizes code blocks using suggestions from this data, it might also synthesize the same vulnerabilities inherent in those code samples

Basically, if Copilot takes its cues from flawed code, the code it produces will necessarily be flawed as well.
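As an illustration of the kind of insecure pattern that is common in public code, consider SQL built by string concatenation. The sketch below uses Python’s standard `sqlite3` module; the table, the data, and the function names are hypothetical, and neither function is claimed to be real Copilot output. The first version is the pattern a model could plausibly reproduce from flawed examples, and the second is the parameterized form a reviewer should insist on.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with two sample users (demo data only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Insecure: user input is pasted directly into the SQL text,
    # so crafted input can change the meaning of the query.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: the value is passed as a bound parameter and is never
    # interpreted as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_demo_db()
payload = "' OR '1'='1"
leaked = find_user_unsafe(conn, payload)   # matches every row in the table
blocked = find_user_safe(conn, payload)    # matches nothing
```

Both functions behave identically on ordinary input, which is exactly why a flaw like this can slip through when generated code is accepted without review.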

However, this isn’t the only potential security risk introduced by Copilot. As Copilot is run by GitHub, any insecure code or personal data accidentally published to GitHub could be included in the code made by Copilot. Sure, this might be rare – but it is possible.

Lastly, all ML/AI tools run the same risk of unintentionally becoming a vehicle for malicious actors or hackers. Say that a company uses Copilot during its development process, but a disgruntled employee uses Copilot to skip oversight practices and integrate code with a backdoor or security flaw they can later exploit.

That employee could then compromise the entire project, sell the information to a willing buyer, or introduce other security threats, all because the company relied too heavily on automation rather than traditional human-based coding and review.

This is one reason why it’s vitally important to mandate that all of your employees at your business use virtual private networks (or VPNs) to encrypt all of their data when they are connected to company networks or dealing with company info. According to cybersecurity expert Ludovic Rembert from Privacy Canada, utilizing a VPN is no longer an option in today’s world.

“Aren’t VPNs obscure tools used by corporations, conspiracy theorists, criminals, and tech-savvy hackers? Not anymore,” says Rembert. “With privacy scandals, data leaks, geo-restrictions, and censoring making headlines and impacting lives every day, VPNs have risen to prominence as must-have services. Every piece of information you send over the internet, from pictures of your cat to your tax return, can potentially be intercepted.”

All in all, there’s a myriad of potential threats that Copilot and machine learning tools like it could introduce to any organization that adopts them.

Will Copilot Ever Be Adopted by the Wider Market?

It’s difficult to say. Machine learning tools aren’t anything new. They’ve been tried for security, for programming, and even to improve commerce. While machine learning technologies hold a lot of promise for developers who want to reduce programming time, the security risks have already been demonstrated in the past.

For example, 2016 saw Microsoft unveil a new chatbot called “Tay”. When Tay was unleashed on Twitter, it quickly conversed with anyone who sent it a message, using the interactions to fuel its algorithmic development.

Unfortunately, Tay was quickly pseudo-trained by the people it talked to. It began tweeting out offensive phrases or images in short order, requiring Microsoft to take the chatbot down immediately. This just goes to show how unrestricted Internet access can quickly train machine learning algorithms to do what isn’t intended.

Perhaps as a result of this experiment, Microsoft itself worked together with several other organizations, including MITRE, to create a dictionary of possible adversarial attacks on machine learning or AI systems. Worryingly, it provided several examples of real attacks.

Bottom line: the threat to machine learning is already here. Copilot certainly isn’t ready for widespread adoption. Even worse, it may never be. It’s simply too soon to say.

Summary

In the end, Copilot is another novel approach toward integrating machine learning and AI technology to cut down on development time for programmers.

However, Copilot also introduces several security risks that could threaten your data, though not through any fault of its own. Instead, the very fact that it must take coding samples from flawed humans who produce flawed code may ultimately curtail its maximum potential.

Overall, Copilot is best viewed as a possible means to accelerate dev times in certain areas. It will never be able to fully replace good coding practices, and it’s unlikely to ever replace your IT security team. Time will tell whether Copilot reaches its full potential or if it remains another failed experiment in the ML/AI sphere.

Whether you choose to use ML/AI-enabled tools like Copilot or not, backups of your repositories are non-negotiable. BackHub offers automated daily backups, granular data restores including metadata, and the option to sync backups to your own S3 storage. Start a free trial today.

Nahla Davies
