Even though GitHub tries to provide enough storage for Git repositories, it imposes limits on file and repository sizes to ensure that repositories are easy to work with and maintain, as well as to ensure that the platform keeps running smoothly.

Individual files added through the browser are limited to 25 MB, while files pushed from the command line are limited to 100 MB. Beyond that, GitHub blocks the push. Repositories themselves, on the other hand, are recommended to stay under 5 GB.
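To check a working tree against these limits before pushing, a quick scan can help. The following is a minimal sketch rather than GitHub tooling: the function name find_files_over is made up for illustration, and it only looks at files currently on disk, not at anything already in history.

```shell
# List files under a directory that are larger than a given size in MiB.
# Directories named .git are skipped so Git's own object store is ignored.
# The function name is illustrative, not part of Git or GitHub.
find_files_over() {
  dir="$1"
  limit_mib="$2"
  find "$dir" -name .git -prune -o -type f -size +"${limit_mib}"M -print
}
```

Running find_files_over . 100 from the project root would list anything above the 100 MB command-line limit, and find_files_over . 25 anything too large for a browser upload.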

While it’s likely that most teams won’t run up against these limits, those who do have to scramble for a solution. For example, if you’re just uploading code, you won’t need to worry about this. However, if your project involves some kind of data, such as data science projects or machine learning analysis, then you most likely will.

In this article, we’ll go over situations that can contribute to large repositories and consider possible workarounds, such as Git Large File Storage (LFS).

The Root of Large Repositories

Let’s cover a few common activities that can result in particularly large Git files or repositories.

Backing Up Database Dumps

Database dumps are usually large SQL files containing a full export of data that can be used to either replicate or back up a database. Developers upload database dumps alongside their project code to Git and GitHub for two reasons:

- To keep the state of data and code in sync

- To enable other developers who clone the project to easily replicate the data for that point in time

This is not recommended: dumps are large, and every new export adds another full copy to the repository’s history. GitHub advises using storage tools like Dropbox instead.

External Dependencies

Developers usually use package managers like Bundler, Node Package Manager (npm), or Maven to manage external project dependencies or packages.

But mistakes happen. A developer could forget to add the installed packages to .gitignore and accidentally commit them to Git history, which would bloat the total size of the repository.
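One way to guard against this is to add the dependency directory to .gitignore before the first commit. Here is a small sketch of a helper that does so without creating duplicate entries. The function name ignore_pattern is hypothetical, and the directory names in the note below (node_modules/ for npm, vendor/bundle/ for a project-local Bundler install) are common defaults, not something every project uses.

```shell
# Add a pattern to .gitignore in the current directory unless it is already listed.
# The function name is illustrative, not part of Git.
ignore_pattern() {
  pattern="$1"
  touch .gitignore
  # Nothing to do if the exact line is already present.
  if grep -qxF -e "$pattern" .gitignore; then
    return 0
  fi
  # Make sure the last existing line ends with a newline before appending.
  if [ -s .gitignore ] && [ -n "$(tail -c 1 .gitignore)" ]; then
    printf '\n' >> .gitignore
  fi
  printf '%s\n' "$pattern" >> .gitignore
}
```

For example, ignore_pattern "node_modules/" followed by ignore_pattern "vendor/bundle/". If the directory was already committed, git rm -r --cached node_modules followed by a commit stops tracking it, although the copies already in history stay there until the history is rewritten.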

Other Large Files

Aside from database dumps and external dependencies, there are other types of files that can bloat a repository:

- Large media assets: Avoid storing large media assets in Git. Consider using Git LFS (see below for more details) or Git Annex, which allow you to version your media assets in Git while actually storing them outside your repository.

- File archives or compressed files: Different versions of such files don’t delta well against each other, so Git can’t store them efficiently. It would be better to store the individual files in your repository or store the archive elsewhere.

- Generated files (such as compiler output or JAR files): It would be better to regenerate them when necessary, or store them in a package registry or even a file server.

- Log and binary files: Committing logs, compiled code, or prepackaged binary releases to your repository can bloat it quickly.

Working with Large Repositories

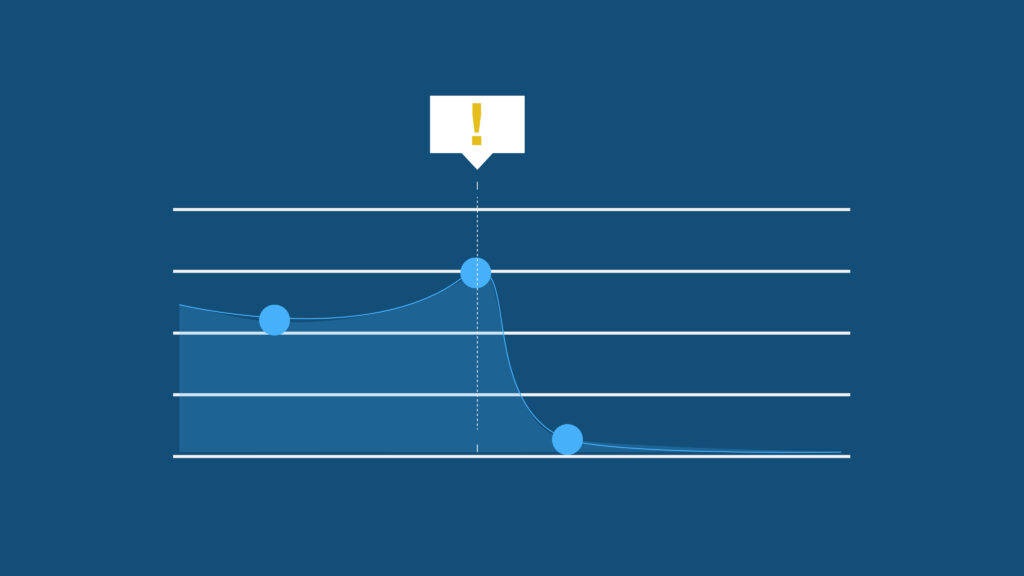

Imagine you run the command git push and after waiting a long time, you get the error message error: GH001 Large files detected. This happens when a file or files in your Git repository have exceeded the allowed capacity.

The previous section discussed situations that could lead to bloated Git files. Now, let’s look at possible solutions.

Solution 1: Remove Large Files from Repository History

If you find that a file is too large, one short-term solution is to remove it from your repository. git-sizer, a repository analyzer that computes size-related statistics about a repository, can help you find what is taking up space. But simply deleting the file is not enough. You also have to remove it from the repository’s history.

A repository’s history is a record of the state of the files and folders in the repository at different times when a commit was made.

As long as a file has been committed to Git/GitHub, simply deleting it and making another commit won’t work. This is because when you push something to Git/GitHub, they keep track of every commit to allow you to roll back to any place in your history. For this reason, if you make a series of commits that adds and then deletes a large file, Git/GitHub will still store the large file, so you can roll back to it.
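To see this for yourself, you can list the largest blobs anywhere in a repository’s history, including files that were deleted in later commits. This is a rough sketch built from standard Git plumbing commands; the function name largest_blobs is made up for illustration.

```shell
# Print the largest blobs reachable from any ref, biggest first,
# as "<size in bytes> <path>". Run it inside a Git repository.
# The function name is illustrative; the commands are standard Git plumbing.
largest_blobs() {
  count="${1:-10}"
  git rev-list --objects --all |
    git cat-file --batch-check='%(objecttype) %(objectsize) %(rest)' |
    sed -n 's/^blob //p' |
    sort -n -r |
    head -n "$count"
}
```

A file that was added in one commit and deleted in the next still shows up in this list, which is why the push keeps getting rejected.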

What you need to do is amend the history to make it seem to Git/GitHub that you never added the large file in the first place.

If the file was just added in your last commit before the attempted push, you’re in luck. You can simply remove the file with the following commands:

git rm --cached csv_building_damage_assessment.csv (stops tracking the file but leaves it on disk)

git commit --amend -C HEAD (amends the last commit, reusing its message)

But if the file was added in an earlier commit, the process will be a bit longer. You can either use the BFG Repo-Cleaner or you can run git rebase or git filter-branch to remove the file.
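As an illustration of the git filter-branch route, here is a hedged sketch that wraps the command in a helper. The function name purge_path_from_history is hypothetical, and the sketch assumes a path without single quotes in it, a clean working tree, and that you are in the top-level directory of a clone you can afford to break.

```shell
# Rewrite every branch and tag so that the given path never appears in any
# commit. This rewrites history: work on a fresh clone or a backup.
# The function name is illustrative; git filter-branch ships with Git.
purge_path_from_history() {
  target="$1"
  # --index-filter runs the quoted command against each commit's index.
  # --prune-empty drops commits that end up with no changes.
  # FILTER_BRANCH_SQUELCH_WARNING skips the startup warning in newer Git versions.
  FILTER_BRANCH_SQUELCH_WARNING=1 git filter-branch --force \
    --index-filter "git rm --cached --ignore-unmatch --quiet -- '$target'" \
    --prune-empty --tag-name-filter cat -- --all
}
```

After something like purge_path_from_history csv_building_damage_assessment.csv, the rewritten branches have to be force-pushed, and Git keeps the old commits under refs/original until you delete them. The Git documentation itself warns about git filter-branch and points to alternatives such as git filter-repo, so treat this as a demonstration of the idea rather than a recommendation.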

Solution 2: Creating Releases to Package Software

As mentioned earlier, one of the ways that repos can get bloated is by distributing compiled code and prepackaged releases within your repository.

Some projects require distributing large files, such as binaries or installers, in addition to distributing source code. If this is the case, instead of committing them as part of the source code, you can create releases on GitHub. Releases allow you to package software release notes and links to binary files for other people to use. Be aware that each file included in a release must be under 2 GB.

See how to create a release here.

Solution 3: Version Large Files With Git LFS

The previous solutions have focused on how to avoid committing a large file or on removing it from your repository. What if you want to keep it? Say you’re trying to commit psd.csv, and you get the file-too-large error. That’s where Git LFS comes to the rescue.

Git LFS lets you push files that are larger than the storage limit to GitHub. It does this by storing references to the file in the repository, but not the actual file. In other words, Git LFS creates a pointer file that acts as a reference to the actual file, which is stored somewhere else. The pointer file is what gets committed and pushed to GitHub, and whenever you clone the repository, Git LFS uses the pointer file as a map to go and find the large file for you.
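A pointer file is just a few lines of text: the version of the pointer spec, the SHA-256 hash of the real content, and its size in bytes. The sketch below prints what such a pointer would look like for a given file. It is an illustration only, since Git LFS generates pointers itself; the function name lfs_pointer_for is made up, and the sketch assumes the sha256sum utility is available.

```shell
# Print the text of a Git LFS pointer for a file: the spec version, the
# SHA-256 of the content, and the size in bytes.
# Illustration only: Git LFS writes these itself. Assumes sha256sum exists.
lfs_pointer_for() {
  file="$1"
  oid=$(sha256sum < "$file" | cut -d ' ' -f 1)
  size=$(wc -c < "$file" | tr -d ' ')
  printf 'version https://git-lfs.github.com/spec/v1\noid sha256:%s\nsize %s\n' "$oid" "$size"
}
```

However large psd.csv is, the pointer stays a few hundred bytes at most, which is why the repository itself remains small. With Git LFS installed, git lfs pointer --file=psd.csv should print the same kind of output.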

Git LFS makes use of a method called lazy pull and fetch for downloading the files and their different versions. By default, these files and their history are not downloaded every time someone clones the repository—only the version relevant to the commit being checked out is downloaded. This makes it easy to keep your repository at a manageable size and improves pull and fetch time.

Git LFS is ideal for managing large files such as audio samples, videos, datasets, and graphics.

To get started with Git LFS, download the version that matches your device’s OS here.

- Set up Git LFS for your account by running git lfs install.

- Select the file types that you want Git LFS to manage using the command git lfs track "*.file extension or filename". This will create a .gitattributes file.

- Add the .gitattributes file to the staging area using the command git add .gitattributes.

- Commit and push just as you normally would.
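Running git lfs track "*.csv", for example, writes a line like *.csv filter=lfs diff=lfs merge=lfs -text into .gitattributes. To double-check what is being routed through Git LFS before you commit, you can read those lines back. This is a small sketch that does not need Git LFS installed; the function name lfs_tracked_patterns is hypothetical.

```shell
# Print the patterns in a .gitattributes file that are routed through Git LFS,
# that is, the lines carrying the filter=lfs attribute.
# The function name is illustrative, not part of Git or Git LFS.
lfs_tracked_patterns() {
  attributes_file="${1:-.gitattributes}"
  awk '{ for (i = 2; i <= NF; i++) if ($i == "filter=lfs") { print $1; break } }' "$attributes_file"
}
```

Running git lfs track with no arguments gives a similar listing when Git LFS is installed.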

Please note that the above method will work only for the files that were not previously tracked by Git. If you already have a repository with large files tracked by Git, you need to migrate your files from Git tracking to git-lfs tracking. Simply run the following command:

git lfs migrate import --include="<files to be tracked>"

With Git LFS now enabled, you’ll be able to fetch, modify, and push large files. However, if collaborators on your repository don’t have Git LFS installed and set up, they won’t have access to those files. Whenever they clone your repository, they’ll only be able to fetch the pointer files.

To get things working properly, they need to download Git LFS and clone the repo, just like they would any other repo. Then to get the latest files on Git LFS from GitHub, run:

git lfs fetch origin master

Conclusion

GitHub does not work well with large files, but Git LFS lets you work around that. However, before you make any sensitive changes, like removing files from Git/GitHub history, it would be wise to back up that GitHub repository first. One wrong command and files could be permanently lost in an instant.

When you back up your repositories with a tool like BackHub (now part of Rewind), you can easily restore backups directly to your GitHub or clone directly to your local machine if anything should go wrong.

Linda Ikechukwu